Learning Machine Learning: Beyond the Hype

Image courtesy of Intersection Consulting

Terran Melconian and I recently published a piece on KDnuggets that clarifies some important distinctions between machine learning and data science and discusses implications for job seekers.

As two experienced data science leaders, we wrote this article to provide an underrepresented perspective on the widespread misunderstanding that machine learning is the best education for early-career data scientists. This misunderstanding has a significant adverse impact on a large number of students, job seekers, companies, and educational institutions. We see this misunderstanding arise frequently in our own work, and have gathered similar feedback from several peers.

You can read the full article on KDnuggets here: Learning Machine Learning vs Learning Data Science; the text is also reproduced below.

Learning Machine Learning vs Learning Data Science

We clarify some important and often-overlooked distinctions between Machine Learning and Data Science, covering education, scalable vs non-scalable jobs, career paths, and more.

By Terran Melconian, entrepreneur and consultant, and Trevor Bass, edX

When you think of “data science” and “machine learning,” do the two terms blur together, like Currier and Ives or Sturm und Drang? If so, you’ve come to the right place. This article will clarify some important and often-overlooked distinctions between the two to help you better focus your learning and hiring.

Machine learning versus data science

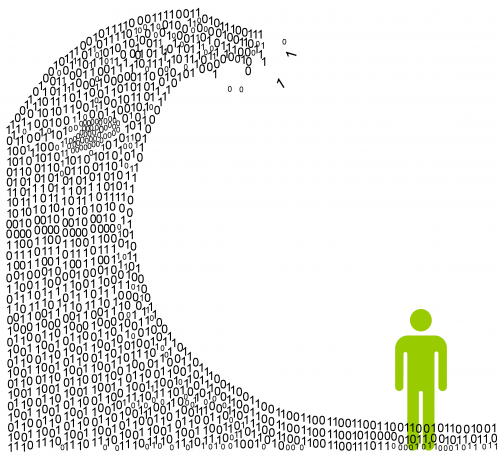

Machine learning has seen much hype from journalists who are not always careful with their terminology. In popular discourse, it has taken on a wide swath of meanings and implications well beyond its scope to practitioners. Machine learning refers to a specific form of mathematical optimization: getting a computer to perform better at some task, through training data or experience, without explicit programming. This often takes the form of building a model based on past cases with known outcomes, and applying the model to make predictions for future cases, finding ways to minimize a numerical “error” or “cost” function representing how much the predictions mismatch reality.
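To make that definition concrete, here is a minimal sketch of what "minimizing a cost function" can look like in practice. Everything in it is an illustrative assumption rather than something from a real project: the four training cases are made up, the model is a single weight, and the cost is mean squared error minimized by plain gradient descent.

```python
# Hypothetical past cases with known outcomes: (input, outcome) pairs.
training_data = [(1.0, 2.0), (2.0, 4.0), (3.0, 6.0), (4.0, 8.0)]


def cost(weight, data):
    """Mean squared error: how much the predictions mismatch reality."""
    return sum((weight * x - y) ** 2 for x, y in data) / len(data)


def fit(data, learning_rate=0.01, steps=1000):
    """Search for the weight that minimizes the cost via gradient descent."""
    weight = 0.0
    for _ in range(steps):
        # Derivative of the mean squared error with respect to the weight.
        gradient = sum(2 * (weight * x - y) * x for x, y in data) / len(data)
        weight -= learning_rate * gradient
    return weight


weight = fit(training_data)

# Apply the trained model to a future case with an unknown outcome.
prediction = weight * 5.0
```

Note what the sketch takes for granted: that the data is trustworthy, that squared error is the right objective, and that anyone needs the prediction in the first place.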

Notice that some important business activities appear nowhere in this definition of machine learning:

- Assessing whether data is suitable for a purpose

- Formulating an appropriate objective

- Implementing systems and processes

- Communicating with disparate stakeholders

The need for these functions led to the recognition of data science as a field. The Harvard Business Review tells us that the “key skills for data scientists are not the abilities to build and use deep-learning infrastructures. Instead they are the abilities to learn on the fly and to communicate well in order to answer business questions, explaining complex results to nontechnical stakeholders.” Other authors agree: “We feel that a defining feature of data scientists is the breadth of their skills – their ability to single-handedly do at least prototype-level versions of all the steps needed to derive new insights or build data products.” Another HBR article affirms, “Getting value from machine learning isn’t about fancier algorithms – it’s about making it easier to use…. The gap for most companies isn’t that machine learning doesn’t work, but that they struggle to actually use it.”

Machine learning is an important skill for data scientists, but it is one of many. Thinking of machine learning as the whole of data science is akin to thinking of accounting as the entirety of running a profitable company. Further, the skills gap in data science is largely in areas complementary to machine learning – business sensibility, statistics, problem framing, and communication.

If you want to be a data scientist, seek out an interdisciplinary education

It is no secret that data scientists are in high and increasing demand. Despite this, much of the most hyped educational programming in data science tends to be concentrated in classes teaching machine learning.

We see this as a significant problem. Many students have focused far too heavily on machine learning education over a more balanced curriculum. This has unfortunately led to a glut of underprepared early-career professionals seeking data science roles. Both of the authors, and several other data science hiring managers with whom they spoke when preparing this article, have interviewed numerous candidates who advertise their knowledge of machine learning but who can say little about basic statistics, bias and variance, or data quality, much less present a coherent project proposal to achieve a business objective.

In the authors’ experience, software engineers seem especially susceptible to the siren’s call of an education too rich in machine learning. We speculate that this is because machine learning uses the same type of thinking that already comes easily to software developers: algorithmic, convergent thinking with clearly defined objectives. An education that is hyper-specialized in machine learning offers the false promise of more interesting work without demanding any fundamental cognitive shifts. Sadly, the job market rarely delivers on this promise, and many who follow this path find that they are unable to make the career shift from engineer to scientist.

Data science demands learning a different style of thought: often divergent, poorly defined, and requiring constant translation in and out of the technical sphere. Data scientists are fundamentally generalists, and benefit from a broad education over a deep one. Interdisciplinary study is a far better bet than a narrow concentration.

Scalable versus non-scalable jobs

Most organizations will generate significantly more value by hiring generalist data scientists before machine learning specialists. To understand why this is the case, it is useful to appreciate the difference between scalable and non-scalable jobs.

Creating general purpose machine learning algorithms is a scalable job – once somebody has designed and implemented an algorithm, everybody can use it with virtually no cost of replication. Of course everyone will want to use the best algorithms, created by the best researchers. Most organizations cannot afford to hire top-tier algorithm designers, many of whom receive seven-figure salaries. Thankfully, much of their work is available to the public in research papers, open source libraries, and cloud APIs. Thus the world’s best ML algorithm designers have an outsized impact, and their work enables the generalist data scientists who use their algorithms to have a large impact in turn.

Conversely, data science is a far less scalable activity. It involves understanding the specifics of a particular company’s business, needs, and assets. Most organizations of a certain size need their own data scientist. Even if some other company’s data scientist had published their approach in detail, it’s virtually certain that some aspects of the problem and situation will differ, and the approach cannot just be copied in toto.

Of course, there are many highly worthwhile and interesting paths for a career other than data science. In case you are thinking of a career more specifically in machine learning, here’s one of the dirty secrets of the industry: Machine Learning Engineers at large companies actually do very little machine learning themselves. Instead, they spend most of their time building data processing pipelines and model deployment infrastructure. If you do want one of these (often excellent) jobs, we still recommend focusing a minority of your education on machine learning algorithms, in favor of general engineering, DevOps practices, and data pipeline infrastructure.

While the world’s best machine learning expert may be able to contribute more to the grand sum of human knowledge than the world’s best data scientist, a skilled data scientist can have an outsized impact in a much broader range of situations. The job market reflects this. If you are seeking employment, you will likely do best by consuming machine learning education as just one part of a balanced diet. And, if you are looking to make your company more data driven, you will likely do best by hiring a generalist.

Counter to the hype, amassing machine learning education beyond the basics without also skilling up in complementary areas has diminishing returns on the job market.

Bios

Terran Melconian has led software engineering, data warehousing, and data science teams at both startups and industry giants including Google and TripAdvisor. He currently guides companies starting their first data science efforts, and teaches data science (not just machine learning!) to software engineers and business analysts.

Trevor Bass is a data scientist with over a decade of experience building highly successful and innovative products and teams. He is currently the Chief Data Scientist at edX, an online learning destination and MOOC provider offering high-quality courses from the world’s best universities and institutions to learners everywhere.

After Hours Marketing podcast: “The Subtle Differences Between Reporting and Analytics”

I was recently interviewed by entrepreneurial B2B digital marketing strategist Greg Allbright for the third episode of his newest podcast, After Hours Marketing. The episode, titled “The Subtle Differences Between Reporting and Analytics,” follows this recent post on the subject. We also touched on the CRAPOLA design principles for quantitative data. If you’d like to listen, the recording is available here.

Seven ways to avoid the seven month data science itch

Image courtesy of Relationships Unscripted

It is a story as old as data science: company has valuable data asset, company hires data scientist, data scientist finds insights, company is thrilled, company can’t figure out what to do next. The months after a company’s data first goes under the microscope can be an exciting time, but as initial goodwill fades the thrill of young love can turn into a seven month itch.

The itch often starts as a mere tickle, but indicates very serious underlying issues. If you have heard the question “how can we use data science here?” around the office more than once, it is likely that your organization is about to enter the trough of disillusionment. Seemingly small problems have a way of revealing themselves to be much larger underneath; the seven month itch can be deadly to a company’s data science efforts.

The seven month itch is felt by both the data science team and its collaborators. The result is a lack of effectiveness, though the perceived root causes differ across the aisle.

Data scientists typically see the seven month itch as a lack of business prioritization and engineering resources to bring their vision to fruition. They often have a seemingly endless stream of ideas and are able to find promising insights in the data, but see the business as unsupportive. This is probably the most common issue expressed by young data science teams.

Collaborators of the data science team, on the other hand, typically see the seven month itch as a lack of practicality and business savvy on the part of the data scientists. Data scientists are frequently derided for being too academic, for privileging theoretical interest over practical relevance. This is often the case.

Both data scientists and their collaborators feel they are not getting what they need from the other party.

For product-facing data science teams, for example, product design is a common area where each side feels the other should have most of the answers. Data scientists assume product managers should be able to spearhead the translation of their predictive models into functioning systems, and product managers assume data scientists should guide the product realization process. In reality, building data products is best done as a team effort that incorporates a variety of skillsets (see, for example, DJ Patil’s Data Jujitsu: The Art of Turning Data into Product).

Communication disconnects – the primary source of the seven month itch – are widespread and detrimental. Generally scrappy folks, data scientists often find ways to scratch the itch. But treating the underlying cause is the only effective long term solution.

As a start, we propose seven things that data science teams and their managers can do to avoid the itch altogether. While these suggestions are targeted toward fairly young teams that are product facing, they should be generally applicable to more experienced and decision support oriented teams as well. These recommendations can help align the business and data science stakeholders, justify dedicating company-wide resources to data science projects, and generally innovate more effectively.

#1: Tie efforts to revenue

Tie data science efforts directly to revenue. Find ways to impact client acquisition, retention, upselling, or cross-selling, or figure out ways to reduce costs. There are invariably countless ways to do this, and the method makes less difference than the critical pivot of allowing data science to be a revenue generator rather than a cost center. Executive sponsorship is critical for the success of data science efforts, especially due to their interdisciplinary and collaborative nature. Some organizations may highly value making progress toward other critical business goals, and making a dent in such goals can be a perfectly valid substitute, but focusing on projects that will impact the company’s bottom line is the surest universal way to get the attention of senior management.

#2: Generate revenue without help

Generate revenue streams prior to requesting any engineering effort. Charging for one time or periodic reports or analyses delivered via Excel or PowerPoint, for example, can be useful in getting attention and buy-in from the rest of the organization.

#3: Strengthen the core

Focus first on improving the organization’s core products, the products that generate most of the revenue. The numbers are bigger there, so having a bigger revenue impact is easier.

#4: Strengthen client relationships

Generate a more consultative relationship with clients through analytics, which will make data science indispensable to account managers and have the potential for many revenue related benefits. For example, do one off analyses for clients to help them improve their use of the product or alleviate other pain points, and track progress closely. Sometimes these partnerships will generate big wins for clients, and sometimes they will be generalizable. As a result, they can be an excellent source of battle-tested business cases, which stand a better chance of getting funded.

#5: Kickstart big projects with quick wins

Don’t forget about the low-hanging fruit. Data scientists are often tempted by the hard problems, but quick wins can help justify a longer leash. Instead of going straight to creating robust decision recommendations for clients, for example, surface some basic data visibility to support their decisions, or give them A/B testing capability if applicable. This first step may not be interesting work from a data science perspective, but it can establish a new client need (e.g. “I don’t know what to do with this information or even what to A/B test; help me optimize this decision”) that data science can then step in and solve. An iterative approach works very well for developing data (and many other types of) products.

#6: Measure customer value

View your products in the light of customer value. You can often justify high prices for data products because they add demonstrable value for clients. Customer value can be data science’s best friend; giving the business a baseline level of visibility into customer value can open new worlds, and should probably be an even higher priority than building any specific data product. Create customer value metrics for the business, separately tracking core products and products that add measurable value. Ideally, give an executive responsibility for goals based on these metrics.

#7: Measure innovation

Create innovation metrics for the business, such as the percentage of revenue from products that are at most one year old, or the percentage of revenue from products that draw their value from analytics. Ideally, give an executive responsibility for goals based on these metrics.
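As a rough illustration, the first of those metrics might be computed as follows. The product names, launch dates, and revenue figures are hypothetical, and so is the 365-day cutoff used as the definition of "at most one year old"; treat this as a sketch, not a standard formula.

```python
from datetime import date

# Hypothetical product records: (name, launch date, annual revenue).
products = [
    ("Core platform", date(2015, 3, 1), 800_000),
    ("Benchmark reports", date(2019, 9, 15), 150_000),
    ("Churn alerts", date(2020, 1, 10), 50_000),
]


def share_of_revenue_from_new_products(products, as_of, max_age_days=365):
    """Fraction of total revenue from products launched within max_age_days."""
    total = sum(revenue for _, _, revenue in products)
    if total == 0:
        return 0.0
    new = sum(
        revenue
        for _, launched, revenue in products
        if (as_of - launched).days <= max_age_days
    )
    return new / total


innovation_share = share_of_revenue_from_new_products(
    products, as_of=date(2020, 6, 30)
)
print(f"{innovation_share:.0%}")  # 20%
```

The same function could cover the second metric by swapping the age check for a flag marking products that draw their value from analytics.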

Many organizations have most of the elements necessary to benefit greatly from data science. These recommendations should help provide a common language between the data science team and its collaborators, which is all that is necessary to avoid the seven month itch. No scratching needed.

The law of small numbers

Big Mark, a new manager at Statistical Misconception Corporation, recently hired his first ever direct report, Little Mark. Little Mark turned out to be a star contributor. The company’s CEO, Biggest Mark, noticed one of Little Mark’s landmark accomplishments, and quickly earmarked another hire for Big Mark. However, he remarked, “You’ve really lucked out with Little Mark, who set a high benchmark. Less than half of new hires make such a marked impact, so according to the law of averages your next hire will miss the mark.” Big Mark raised a question mark: is Biggest Mark’s reasoning on the mark?

The law of averages is an imprecise term, and it is used in many different ways. It is one of the most popularly misused applications of statistical principles. Biggest Mark’s interpretation of the law of averages is fairly common – that outcomes of a random event will even out within a small sample. Let’s discuss two of the main problems with Biggest Mark’s reasoning:

First, the sample size is too small. In probability theory, the law of large numbers (which is likely the seed that is misinterpreted into the law of averages) states that the average of the results obtained from a large number of independent trials should come close to the expected value, and will tend to become closer as more trials are performed. For example, the likelihood that a flipped penny lands on heads is 50%, so if you flip it a large number of times then roughly half of the results should be heads. This law doesn’t say anything meaningful about small sample sizes – it’s the law of large numbers, not the law of small numbers. In fact, if you flip a penny twice, there is only a 50% chance that you will end up with one head and one tail; the other half of the time you’ll end up with either two heads or two tails. For 10 flips, about a third of the time you’ll end up with at least 7 heads or 7 tails.
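The two coin-flip figures above can be checked with a few lines of arithmetic. This is a small sketch assuming a fair coin and independent flips; the helper name `prob_heads` is ours.

```python
from math import comb


def prob_heads(n_flips, k_heads):
    """Probability of exactly k_heads in n_flips of a fair coin."""
    return comb(n_flips, k_heads) / 2 ** n_flips


# Two flips: chance of exactly one head and one tail.
p_even_split = prob_heads(2, 1)

# Ten flips: chance of at least 7 heads or at least 7 tails.
# Both cannot happen in the same 10 flips, so the probabilities simply add.
p_at_least_7_heads = sum(prob_heads(10, k) for k in range(7, 11))
p_lopsided = 2 * p_at_least_7_heads

print(f"{p_even_split:.0%}")  # 50%
print(f"{p_lopsided:.1%}")    # 34.4%
```

So even with ten flips, a split of 7 to 3 or worse shows up roughly one time in three.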

Second, the law of large numbers is only applicable if each event is independent of all the others. In probability theory, two events are independent if the occurrence of one does not affect the probability of the other. In our case, invoking the law of large numbers would necessitate that the likelihood of Big Mark’s second hire being excellent is unaffected by the performance of his first hire.

Back at Statistical Misconception Corporation (trademark), Biggest Mark has a business sense second to none. While attending college in Denmark, where his maximum marks were in marketing, he became known as Business Mark, or Biz Markie for short. (As a side note, his relationship with this girl from the U.S. nation, Beauty Mark, ended tragically when he found out that Other Mark wasn’t just a friend.)

In the end, it turned out that Big Mark’s next hire, Accent Mark, defied the CEO’s curse. Instead of making a skid mark, he set a new watermark for what a Mark should be – he was one of the markiest Marks the company had ever marked.

#HashMark

The red and green rule

Image courtesy of auroragov.org

Early in my career, I produced many data visualizations for a senior executive. Let’s call him Gordon. Gordon is unabashedly a man of strong convictions. One of his most strongly and repeatedly voiced was that, in any data visualization, the color green had to represent good and the color red had to represent bad. And there always had to be good and bad. No exceptions.

I quickly caught on, and for the work I did for him I began abiding by his iron red and green rule. Nevertheless, he reminded me of it several times, asking whether green meant good and red meant bad in the visualizations I produced, despite my answers being uniformly in the affirmative.

At first, I attributed his frequent questions to a lack of trust; in fact, it was one of the only factors contributing to my (slight) perception of a lack of trust in our relationship.

Then, one day, I was sitting in Gordon’s office, while a dashboard brimming with my red and green charts filled his computer screen. He was one of the primary users of this particular dashboard, and was quite familiar with its content. He asked me a question that, for the first time, took me by surprise: “are these charts red and green?”

That’s the moment I realized that the frequent questions were not about trust. Gordon is colorblind.

Why would someone with red-green colorblindness want reports of which he is a primary user in red and green?

It turns out that Gordon picked up this conviction while working for a (colorseeing) CEO who insisted on red and green in his charts. Once acquired, it became a hard and fast rule, part of Gordon’s data visualization grammar.

We all have strong convictions. In the world of data visualization, convictions are often influenced by poor conventions set over decades, such as by lay users of Microsoft Office. The proliferation of 3D pie charts is more about convictions and conventions than about good data visualization.

The next time you’re creating a data visualization, apply your own critical thinking rather than relying on conventions. Consider the best way to surface and communicate the information. Critical thinking, not following conventions, is the path to creating the best data visualizations.